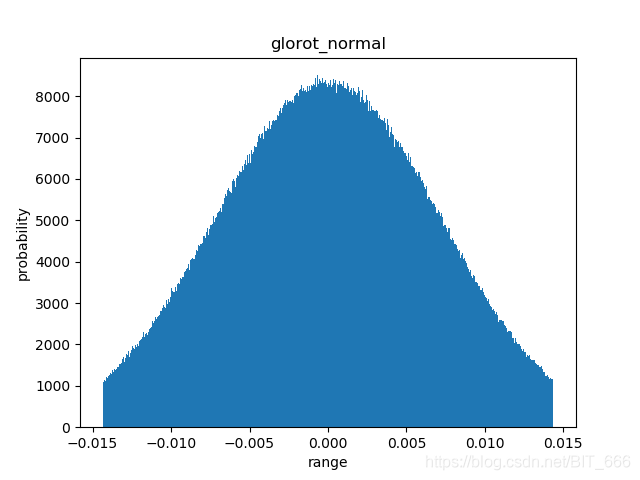

python - How can I get exactly the same results with the same seed using initializers "manually" and with Keras? - Stack Overflow en español

he_uniform vs glorot_uniform across network size with and without dropout tuning | scatter chart made by

neural networks - All else equal, why would switching from Glorot_Uniform to He initializers cause my loss function to blow up? - Cross Validated

The influence of Lake Okeechobee discharges on Karenia brevis blooms and the effects on wildlife along the central west coast of Florida - ScienceDirect

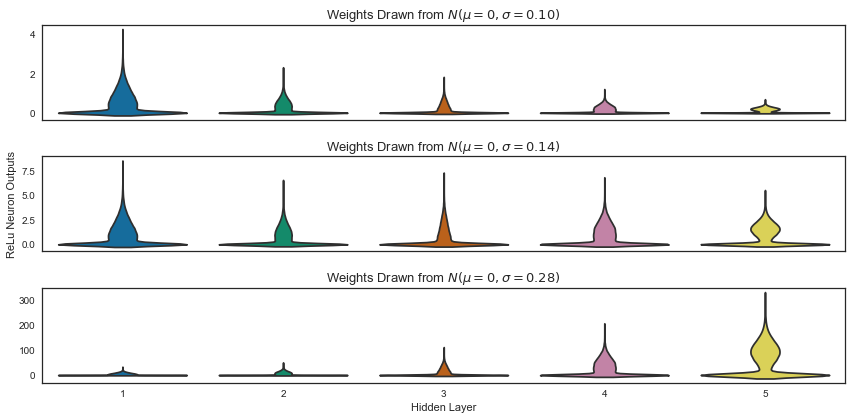

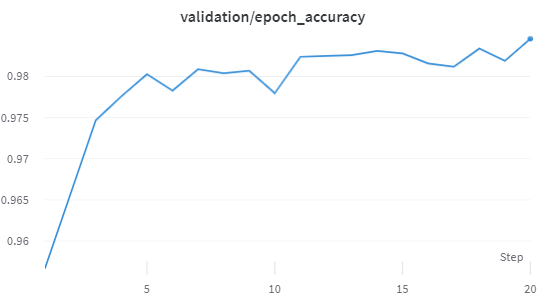

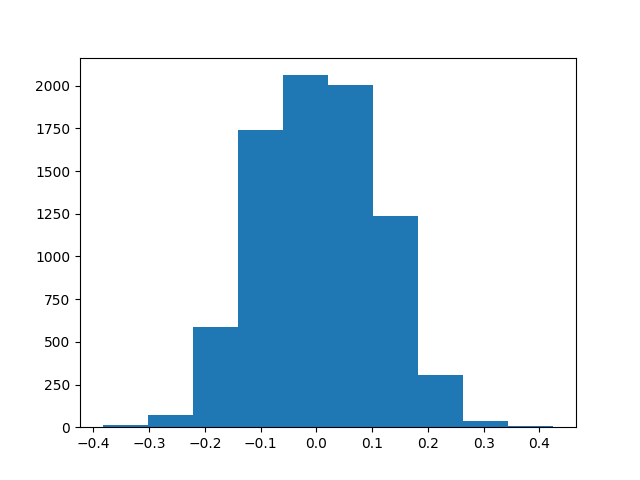

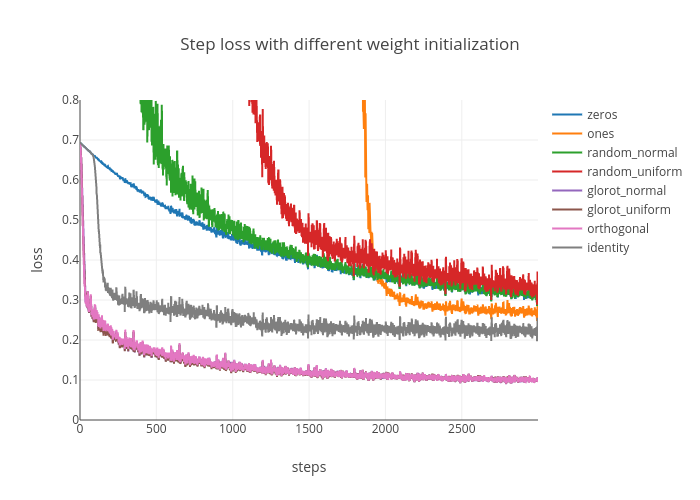

Priming neural networks with an appropriate initializer. | by Ahmed Hosny | Becoming Human: Artificial Intelligence Magazine

Practical Quantization in PyTorch, Python in Fintech, and Ken Jee's ODSC East Keynote Recap | by ODSC - Open Data Science | ODSCJournal | Medium

Why is glorot uniform a default weight initialization technique in tensorflow? | by Chaithanya Kumar | Medium